3-3 Ensemble Of Tree

1. Ensemble(앙상블)

- An ensemble is a group of people who work or perform together.

- 앙상블(ensemble)은 전체적인 어울림이나 통일. ‘조화’로 순화한다는 의미의 프랑스어

- 음악에서 2인 이상이 하는 노래나 연주를 말하며,

- 뮤지컬에서 주, 조연 배우들 뒤에서 화음을 넣고 춤을 추고 노래를 부르면서 분위기를 돋구는 역할

2. Tree의 Ensemble

- Decision Tree Algorithm

- Ensemble methods are meta-algorithms that conbine several machine learning techniques into one predictive model

- in order to decrease variance(bagging) and bias(boosting)

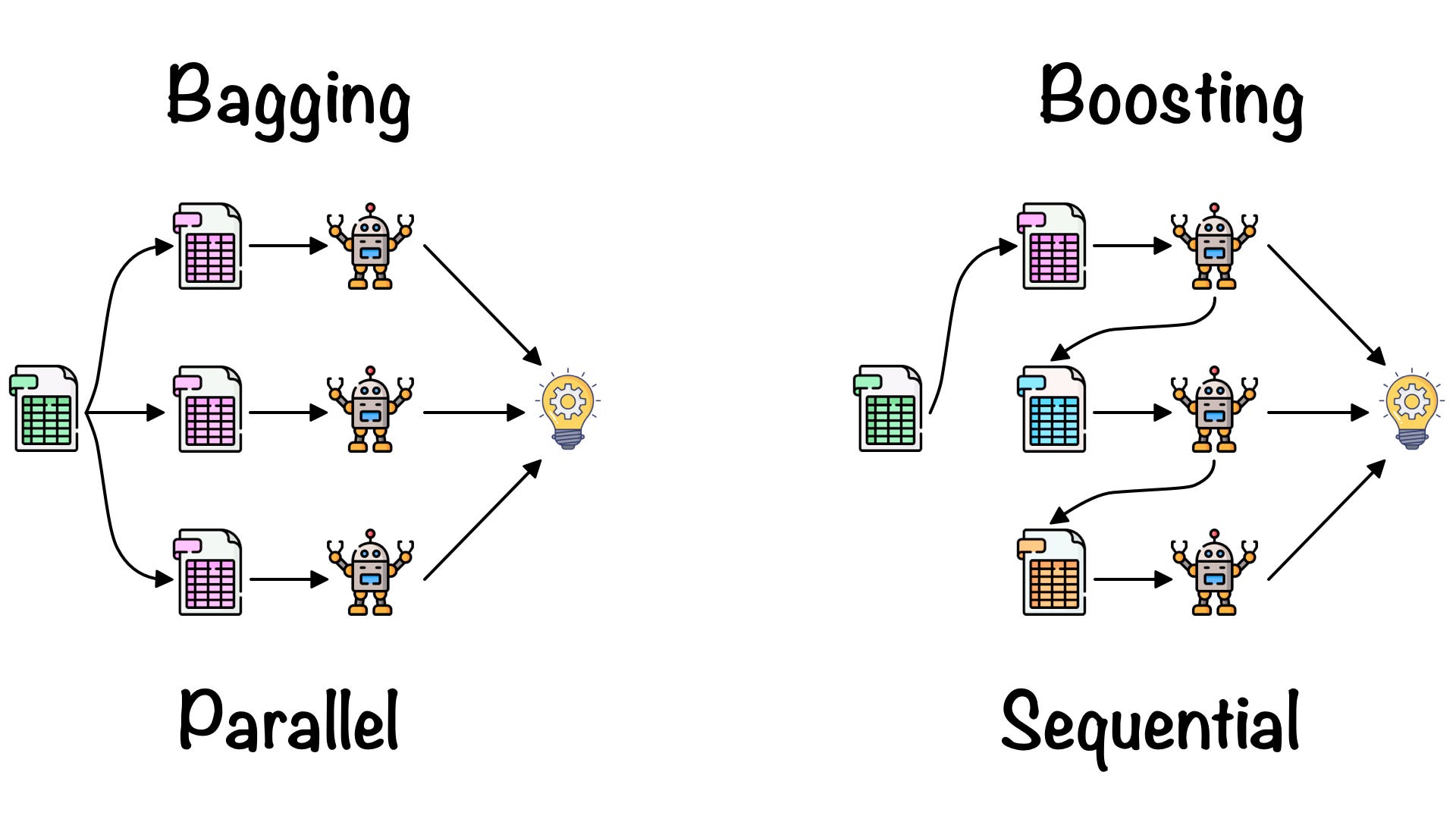

Ensemble methods can be divided into two groups

- Parallel ensemble methods where the base learners are generated parallel (e.g Random Forest)

- exploit independence between the base learners

- since the error can be reduced dramatically by averaging

- Sequential ensemble methods where the base learners are generated sequentially (e.g AdaBoost)

- exploit the dependence between the base learners

- The overall performance can be boosted by weighing previously mislabeld examples with higher weight

3. Bagging (→Decrease Variance)

- Bootstrap 방식의 앙상블 학습법

- Bootstrap aggregating

- Bagging은 분산을 줄이고 overfitting을 피하도록 해줌

- Decision Tree나 Random Forest에 적용되는 것이 일반적

Bootstrap

- 첫 번째 차례에서 파란 공이 뽑힐 확률 = 1/3

- 두 번째 차례에서 파란 공이 뽑힐 확률 = 1/3

- 세 번째 차례에서 파란 공이 뽑힐 확률 = 1/3

- 9개 공이 모두 파란색일 수 있다

개별 분류기보다 성능이 뛰어난 이유

- 이항분포의 확률 질량 함수

- 단일 Tree의 Binary Class 확률에 대해 N개의 Tree로 확장 시켰을 때의 오차율

.png)

- 따라서 앙상블의 에러 확률은 개별 분류기 보다 항상 좋다.

- 다만 개별 분류기의 에러율이 0.5이면서 앙상블할 분류기가 짝수 일 때, 예측이 반반으로 나뉘면 에러로 취급된다.

- 따라서 개별 분류기가 무작위 추측(P(E)=0.5)보다는 성능이 좋아야 한다.

A Commonly used class of ensemble algorithms are forest of randomized trees

- In random forests, each tree in the ensemble is built from a sample drawn with replacement (i.e a bootstrap sample) from the traning set.

- In addition, instead of using all the features, a random subset of features is selected, further randomizing the tree

- As a result, the bias of the forest increases slightly, but due to the averaging of less correlated trees (상관관계가 적은 tree의 평균화 때문에), its variance decreases, resulting in an overall better

- 각 Tree의 Prediction을 모아 다수결 투표(Majority voting)로 예측

4. Boosting (→Decrease Bias)

Boosting

- the hypothesis boosting

- Reduce Bias

- Improve Performance

Compare with Bagging

- 공통점

- 1개의 Strong Learner가 아닌 n개의 Weak Learner Model의 Ensemble 방식

- Weak Learner를 다수결 투표(Majority voting)로 연결

- 차이점

- 각 모델을 만들기 위한 sampling에서 중복을 허용하지 않음 (Bootstrap 방식 X)

- 상호 의존적인 개별 모델

- 이전 Model의 영향을 받아서 Error에 더 큰 가중치 부여

AdaBoost

Gradienc Boosting

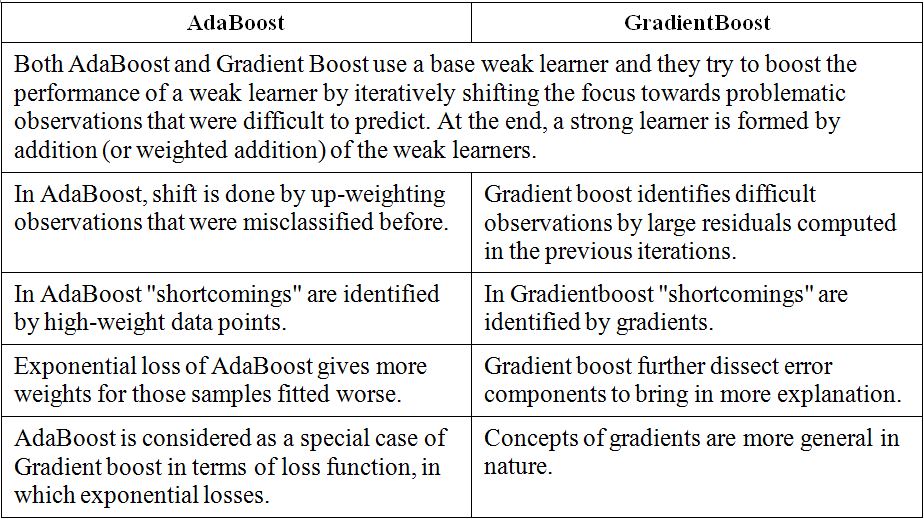

- AdaBoost와 Gradient Boosting은 전반적인 개념이 같다.

- Weak (깊이가 1인 Decision Tree와 같은) Learner를 Boosting하여 Strong Model을 만든다.

XGBoost

LightGBM

5. Quiz

- Variance를 낮추기 위한 Ensemble 방식은? 🤡

(a) Bagging (b) Gradienc Descent

(c) Boosting (d) Hyperparameter Tuning