Prepreperation

- train set을 아래와 같이 세팅하고 훈련합니다

Train Set

import numpy as np perch_length = np.array( [8.4, 13.7, 15.0, 16.2, 17.4, 18.0, 18.7, 19.0, 19.6, 20.0, 21.0, 21.0, 21.0, 21.3, 22.0, 22.0, 22.0, 22.0, 22.0, 22.5, 22.5, 22.7, 23.0, 23.5, 24.0, 24.0, 24.6, 25.0, 25.6, 26.5, 27.3, 27.5, 27.5, 27.5, 28.0, 28.7, 30.0, 32.8, 34.5, 35.0, 36.5, 36.0, 37.0, 37.0, 39.0, 39.0, 39.0, 40.0, 40.0, 40.0, 40.0, 42.0, 43.0, 43.0, 43.5, 44.0] ) perch_weight = np.array( [5.9, 32.0, 40.0, 51.5, 70.0, 100.0, 78.0, 80.0, 85.0, 85.0, 110.0, 115.0, 125.0, 130.0, 120.0, 120.0, 130.0, 135.0, 110.0, 130.0, 150.0, 145.0, 150.0, 170.0, 225.0, 145.0, 188.0, 180.0, 197.0, 218.0, 300.0, 260.0, 265.0, 250.0, 250.0, 300.0, 320.0, 514.0, 556.0, 840.0, 685.0, 700.0, 700.0, 690.0, 900.0, 650.0, 820.0, 850.0, 900.0, 1015.0, 820.0, 1100.0, 1000.0, 1100.0, 1000.0, 1000.0] )

Train Set과 Test Set으로 나누기

from sklearn.model_selection import train_test_split # 훈련 세트와 테스트 세트로 나누기 train_input, test_input, train_target, test_target = train_test_split( perch_length, perch_weight, random_state=42) # 훈련 세트와 테스트 세트를 2차원 배열로 변경 train_input = train_input.reshape(-1, 1) test_input = test_input.reshape(-1, 1)

Train

from sklearn.neighbors import KNeighborsRegressor knr = KNeighborsRegressor(n_neighbors=3) # k-최근접 이웃 회귀 모델을 훈련합니다 knr.fit(train_input, train_target)score

print(knr.score(test_input,test_target))output : 0.992809406101064

k-NN의 한계

- k-NN 모델을 이용하여 길이가 50cm인 농어의 무게 예측

prediction

print(knr.predict([[50]]))output : 1033.33333333

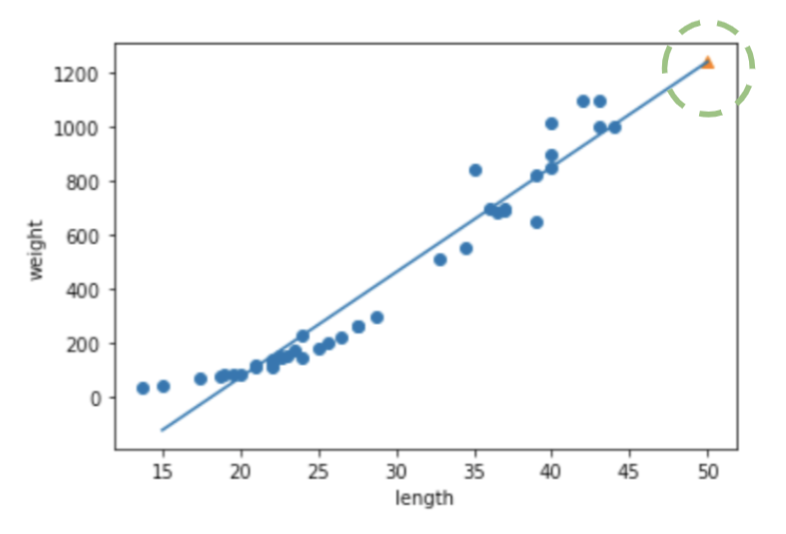

- Train Set과 예측값 시각화

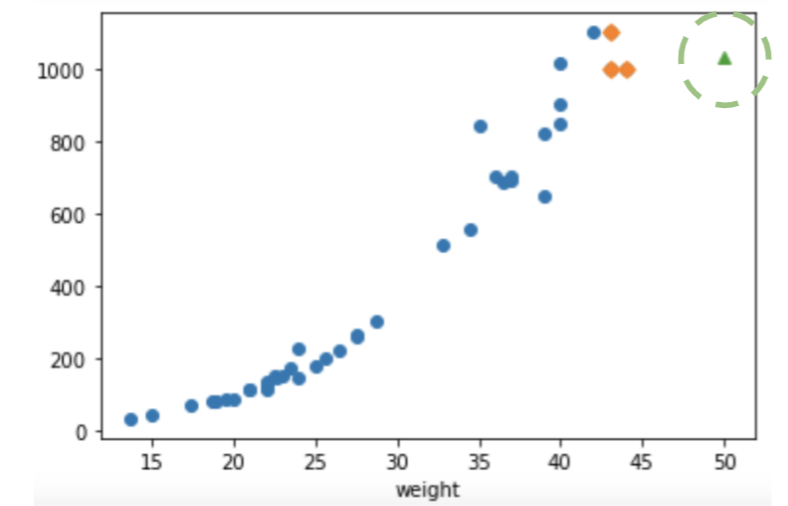

# 50cm 농어의 이웃을 구합니다 distances, indexes = knr.kneighbors([[50]]) # 훈련 세트의 산점도를 그립니다 plt.scatter(train_input, train_target) # 훈련 세트 중에서 이웃 샘플만 다시 그립니다 plt.scatter(train_input[indexes], train_target[indexes], marker='D') # 50cm 농어 데이터 plt.scatter(50, 1033, marker='^') plt.xlabel('length') plt.ylabel('weight') plt.show()output :

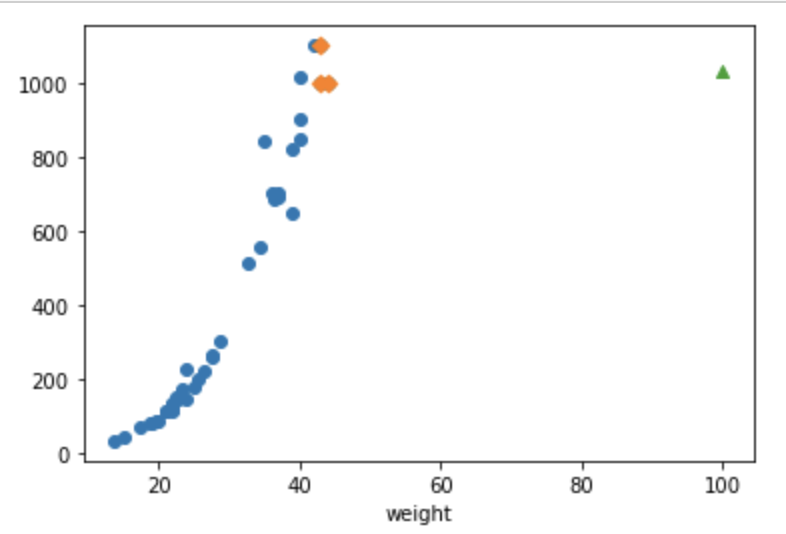

- 위와 같은 방법으로 k-NN 모델을 이용하여 길이가 100cm인 농어의 무게 예측

k-NN의 한계

- k-NN(k-최근접 알고리즘) 은 input 값과 가장 최근접하는 이웃 n개의 평균 함으로써 예측하는 모델임(n = n_neighbor)

- rain set의 범위를 벗어난 예측을 하지 못함

Linear Regression (선형 회귀)

- 위와 같은 한계점을 극복할 수 있는 알고리즘으로 선형 함수를 이용하여(직선) 예측을 수행

- 간단하며 직관적이며 성능이 뛰어남

Train

from sklearn.linear_model import LinearRegression lr = LinearRegression() # 선형 회귀 모델 훈련 lr.fit(train_input, train_target)output : LinearRegression()

prediction

# 50cm 농어에 대한 예측 print(lr.predict([[50]]))output : [1241.83860323]

- 직선(y = ax + b)을 그리기 위해서는 기울기와 절편, 즉 a와 b값이 있어야함

- 선형 회귀 분석의 목적 -> 우리가 가진 데이터를 가장 잘 설명할 수 있는 a, b값을 찾는 것

- LinearRegression 클래스가 찾은 a, b값은 lr 객체의 coef_와 intercept_에 저장되어 있음

LinearRegression

print(lr.coef_, lr.intercept_)output.: [39.01714496] -709.0186449535477

- 찾은 a,b값을 이용하여 선형함수를 그린다

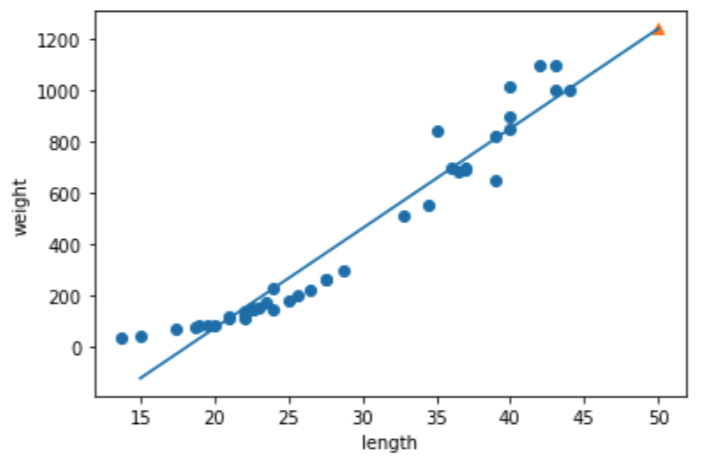

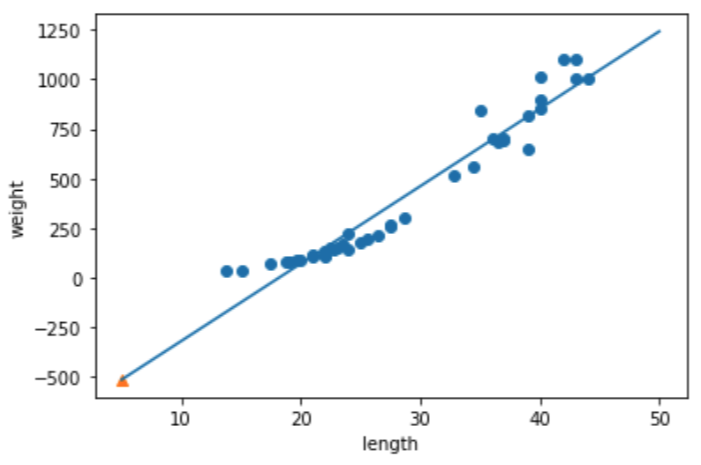

# 훈련 세트의 산점도를 그립니다 plt.scatter(train_input, train_target)output :

# 15에서 50까지 1차 방정식 그래프를 그립니다 plt.plot([15, 50], [15*lr.coef_+lr.intercept_, 50*lr.coef_+lr.intercept_]) # 50cm 농어 데이터 plt.scatter(50, 1241.8, marker='^') plt.xlabel('length') plt.ylabel('weight') plt.show()output :

- lr score 확인

print(lr.score(train_input, train_target)) print(lr.score(test_input , test_target))output :

0.9398463339976041

0.8247503123313559

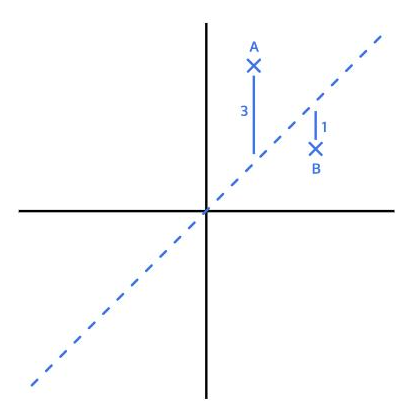

Loss - 선형 회귀에서 발생하는 오차, 손실 (added by emma)

- 데이터들을 선으로 표현하는 것은 실제 데이터와 약간의 차이가 발생

- 오차, 손실(Loss)

- 아래 그림을 보면 A는 3, B 는 1만큼의 손실이 발생함

- 엄밀히 보면 +, -를 고려하지 않고 이야기 한 것임

- 선과 실제 데이터 사이에 얼마나 오차가 있는지 구하려면 양수,음수 관계없이 동일하게 반영되도록

- 모든 손실에 제곱을 해주는게 좋음 (위 그림의 오차를 제곱값으로 설명하면 A는 9, B는 1이라고 볼 수 있음)

- 이러한 방식으로 손실을 구하는 걸 평균 제곱 오차(mean squared error, MSE)

- 손실을 구할때 가장 널리 쓰임

- 제곱하지 않고 절대값으로 평균을 구하는 평균 절대 오차(mean absolute error, MAE)

- MSE와 MAE를 절충한 후버 손실(Huber loss), 1-MSE/VAR으로 구하는 결정 계수(coefficient of determination) 등이 있음

- 결국 선형 회귀 모델의 목표 : 모든 데이터로부터 나타나는 오차의 평균을 최소화할 수 있는 최적의 기울기와 절편을 찾는 것

- 손실을 최소화 하기 위한 방법 : 경사하강법 (Gradient Descent)

Linear Regression 의 한계

- 아래 그림과 같이 lr을 사용하여 길이가 5cm인 농어를 예측했을 경우 무게가 음수값이 나옴

- 이는 현실에서는 나올 수 없는 경우임

Polynomial Regression (다항식 회귀)

- 이러한 한계점을 극복하고 곡선으로 선형을 그려, 보다 최적화된 알고리즘을 찾을 수 있음

-

이차함수를 이용 (y = ax^2 + bx + c)

- train set 생성

넘파이를 이용하여 x의 제곱값과 x값으로 train set과 test set 생성

train_poly = np.column_stack((train_input ** 2, train_input)) test_poly = np.column_stack((test_input ** 2, test_input)) print(train_poly.shape, test_poly.shape)output : (42, 2) (14, 2)

- train_poly만 찍어보면 아래와 같이 array가 생성됨

train_polyoutput:

array([[ 384.16, 19.6 ], [ 484. , 22. ], [ 349.69, 18.7 ], [ 302.76, 17.4 ], [1296. , 36. ], [ 625. , 25. ], [1600. , 40. ], [1521. , 39. ], [1849. , 43. ], [ 484. , 22. ], [ 400. , 20. ], [ 484. , 22. ], [ 576. , 24. ], [ 756.25, 27.5 ], [1849. , 43. ], [1600. , 40. ], [ 576. , 24. ] …

- 길이가 50cm인 농어의 무게 예측

prediction

lr = LinearRegression() lr.fit(train_poly, train_target) print(lr.predict([[50**2, 50]]))output : [1573.98423528]

- y = ax^2 + bx + c 와 같은 곡선함수를 만들기 위하여, LinearRegression 클래스 이용해서 a,b,c 값을 찾을 수 있음

print(lr.coef_, lr.intercept_)output : [ 1.01433211 -21.55792498] 116.0502107827827

- 찾은 a,b,c 값을 이용하여 다항함수를 그린다

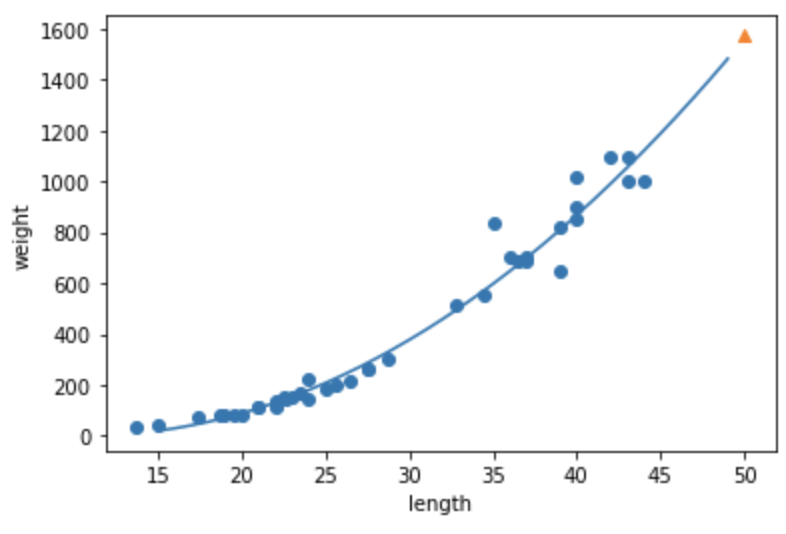

# 구간별 직선을 그리기 위해 15에서 49까지 정수 배열을 만듭니다 point = np.arange(15, 50) # 훈련 세트의 산점도를 그립니다 plt.scatter(train_input, train_target) # 15에서 49까지 2차 방정식 그래프를 그립니다 plt.plot(point, 1.01*point**2 - 21.6*point + 116.05) # 50cm 농어 데이터 plt.scatter([50], [1574], marker='^') plt.xlabel('length') plt.ylabel('weight') plt.show()output :